Software Test Automation: A Practical Guide to Faster, Safer Releases

At its core, software test automation is about using specialized tools to execute pre-scripted tests. Instead of a human manually clicking through every feature, a script validates the application's behavior. The business outcome is clear: you get faster feedback on code quality, broader test coverage, and a significant reduction in human error, which directly lowers the risk of shipping critical bugs.

This guide provides a consultant's view on building a software test automation strategy that delivers real business value, helping you reduce costs, accelerate time-to-market, and mitigate operational risk.

Why Manual Testing Creates a Bottleneck

In modern development cycles, relying solely on manual testing is a significant business liability. It's slow, prone to error, and simply doesn't scale with the speed required for continuous delivery.

The core issue is regression testing. As your application grows, the list of existing features that need re-testing after every change grows with it. This manual effort quickly becomes a bottleneck, delaying releases and consuming valuable engineering time that could be spent on innovation.

The Business Impact of Inefficiency

These testing bottlenecks directly impact key business metrics. Slower release cycles mean a slower time-to-market, giving competitors an advantage. Furthermore, the repetitive nature of manual regression testing leads to burnout and increases the likelihood of human error, allowing costly defects to reach customers.

Relying on manual regression testing is like paving the same road after every rainstorm. A strategic software test automation framework builds a highway—it enables fast, reliable, and scalable delivery.

The goal isn't to replace skilled QA engineers but to augment them. By automating repetitive checks, you free up your quality experts to focus on high-value activities that require human intuition:

- Exploratory Testing: Creatively investigating the application to uncover non-obvious defects.

- Usability Testing: Assessing the genuine user experience from a human perspective.

- Complex Scenario Analysis: Evaluating intricate business workflows where automation is impractical.

This strategic shift is driving significant market growth. The global automation testing market is projected to grow from $28.1 billion to $55.2 billion by 2028. You can read the full research on automation market trends for a deeper analysis. A well-executed test automation initiative isn't just a quality improvement project; it's a competitive advantage that enables confident and rapid innovation.

Building a Modern Software Test Automation Strategy

An effective software test automation strategy isn't about automating everything possible; it's about making strategic choices to automate the right things. A lack of planning often leads to brittle tests, wasted engineering hours, and a negative return on investment.

A solid strategy is built on maximizing efficiency, reducing business risk, and shortening the feedback loop for developers. Before writing any automation code, a plan is essential. This starts with a clear understanding of how to write effective test cases, as these form the blueprint for your automated scripts.

From there, the Test Pyramid provides a proven model for structuring your test suite.

The Test Pyramid as Your Architectural Blueprint

The Test Pyramid is a framework for balancing different types of automated tests to achieve an optimal mix of speed, cost, and reliability. It advocates for a large base of fast, low-cost tests and a small number of slow, high-cost tests at the top.

Unit Tests (The Foundation): The majority of your tests should be here. Unit tests verify individual functions or components in isolation. They execute in milliseconds and pinpoint the exact location of a failure, providing immediate feedback to developers. A strong unit test foundation ensures the basic building blocks of your application are sound.

Integration Tests (The Middle Layer): These tests verify that different modules or services work together as expected. For example, does your application correctly interact with the database? Integration tests are slower than unit tests but are crucial for catching defects that occur at the boundaries between system components.

End-to-End (E2E) Tests (The Peak): At the top, E2E tests simulate a full user journey through the application's UI. They are essential for validating critical business flows, like a checkout process. However, they are the slowest, most brittle, and most expensive tests to maintain. A sound strategy minimizes their use, focusing only on the most critical, revenue-impacting user paths.
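
To make the base of the pyramid concrete, here is a minimal sketch of unit tests written with Python's built-in `unittest` module. The `cart_total` function and its discount rule are hypothetical, invented purely for illustration. The point is that each test exercises one function in isolation, with no database, network, or UI involved.

```python
import unittest


def cart_total(prices, discount_rate=0.0):
    """Hypothetical function under test: sum of prices minus a fractional discount."""
    if not 0.0 <= discount_rate <= 1.0:
        raise ValueError("discount_rate must be between 0 and 1")
    return round(sum(prices) * (1.0 - discount_rate), 2)


class CartTotalTest(unittest.TestCase):
    def test_sums_prices(self):
        self.assertEqual(cart_total([10.0, 5.5]), 15.5)

    def test_applies_discount(self):
        self.assertEqual(cart_total([100.0], discount_rate=0.2), 80.0)

    def test_rejects_invalid_discount(self):
        # A failure here points directly at the validation logic.
        with self.assertRaises(ValueError):
            cart_total([10.0], discount_rate=1.5)
```

Saved as a test module, this can be run with `python -m unittest`. Because nothing external is involved, a suite of tests like these typically finishes fast enough to run on every commit.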

Expanding Beyond the Pyramid

While the pyramid is an excellent foundation, a comprehensive software test automation strategy must also incorporate non-functional tests that are critical for business success, particularly in regulated industries.

Consider these additional layers:

- API Testing: APIs are the backbone of modern applications. Robust API testing ensures your system integrations remain stable, protecting revenue streams and partner relationships.

- Performance Testing: This practice identifies system bottlenecks before they impact users. Simulating user load helps prevent costly outages during peak traffic, which directly protects revenue and brand reputation.

- Security Testing: Automated security scans can identify common vulnerabilities early in the development lifecycle. This is non-negotiable for maintaining compliance (e.g., GDPR, SOC 2) and protecting against data breaches.

Adopting a "Shift-Left" Mindset

The guiding principle for modern quality assurance is "shifting left"—the practice of moving testing activities earlier in the development lifecycle.

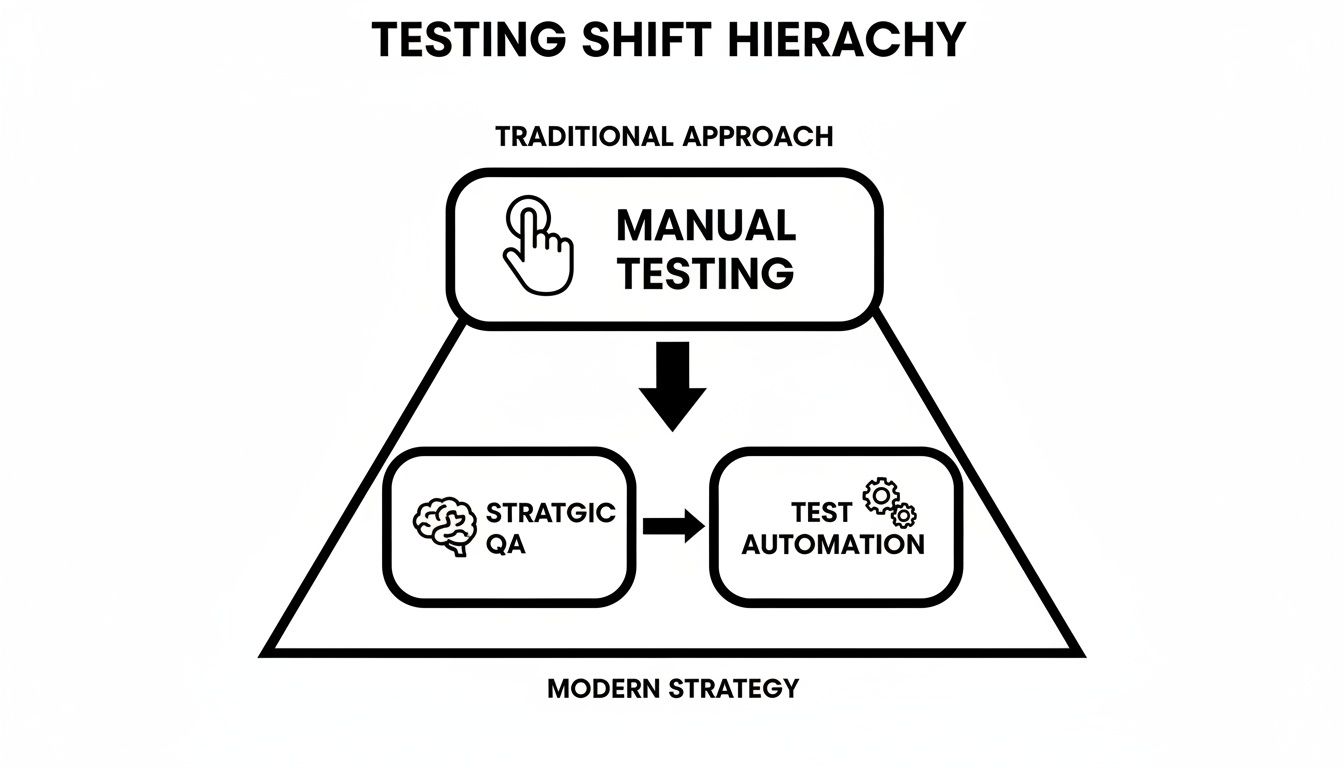

This graphic illustrates the evolution from traditional, late-stage QA to a proactive, integrated approach where quality is a shared responsibility.

Shifting left means quality is no longer the sole responsibility of a QA team at the end of the process. Instead, it becomes a continuous, collaborative effort.

By integrating automated tests into your CI/CD pipeline, developers receive immediate feedback on every code commit. Bugs are found and fixed when they are cheapest to resolve—moments after they are introduced. You can learn more about this integrated approach in our guide to Q&A testing. This proactive methodology not only reduces risk but also enables your team to deliver higher-quality software at a faster pace.

Choosing the Right Tools and Frameworks

Selecting the right tool for your software test automation initiative is a critical decision that can accelerate your efforts or saddle you with long-term technical debt. The optimal choice is not simply the most popular tool but the one that best aligns with your team's skills, technology stack, and business objectives.

The right framework can significantly shorten time-to-market, reduce the cost of quality, and prevent production failures that erode customer trust and impact revenue. This makes tool selection a strategic, not just a technical, decision.

Define Your Requirements First

Before evaluating specific tools, it's essential to understand your internal ecosystem. The ideal solution is context-dependent.

Ask these key questions:

- Technology Stack Compatibility: Does the tool integrate seamlessly with your application's architecture? For a React-based front end, a modern JavaScript framework like Playwright or Cypress will likely be a more efficient choice than a legacy tool.

- Team Skillset: What programming languages are your engineers proficient in? Forcing a Python team to adopt a Java-based framework introduces friction and slows down adoption. Leveraging existing skills—such as JavaScript for Cypress or Python for Selenium—accelerates implementation.

- Scalability Needs: Will the tool support your growth? Consider its capabilities for parallel test execution, integration with cloud-based testing grids, and its ability to handle increasing application complexity.

- CI/CD Integration: How easily does the tool integrate with your existing pipeline (e.g., GitHub Actions, Jenkins, GitLab CI)? Seamless integration is non-negotiable for enabling a fast feedback loop.

Open-Source vs. Commercial Platforms

A primary decision point is whether to use a free, open-source framework or a paid, commercial platform. This is a classic trade-off between upfront cost, internal effort, and access to dedicated support.

Open-source tools like Selenium and Playwright offer immense flexibility and are backed by large communities. Their primary advantage is the absence of licensing fees. However, this comes at the cost of requiring your team to handle all setup, configuration, and ongoing maintenance.

Commercial platforms from vendors like TestComplete or Katalon provide all-in-one solutions with dedicated support, pre-built reporting dashboards, and features like record-and-playback or AI-assisted maintenance. These can significantly accelerate time-to-value, especially for teams without deep automation expertise. The trade-off is the recurring subscription cost.

Market trends show a blend of approaches. For example, some development teams are leveraging AI-driven frameworks to manage the growing 58.5% share of dynamic testing but are customizing them to meet strict security requirements. You can discover more insights about the automation testing market on snsinsider.com.

The choice between open-source and commercial tooling is a "build vs. buy" decision. It balances the direct cost of licensing against the indirect costs of internal engineering time and the business value of professional support.

This table breaks down the core trade-offs.

Automation Framework Selection Criteria

Ultimately, the best tool is the one that fits your context. By starting with your needs and aligning the choice with your business goals, you can select a software test automation solution that acts as a business accelerator.

Integrating Automation into Your CI/CD Pipeline

The true value of software test automation is realized when it is seamlessly integrated into your Continuous Integration and Continuous Delivery (CI/CD) pipeline. This transforms testing from a separate, periodic event into an automated, continuous quality gate woven directly into the development process.

This integration creates an immediate feedback loop for your development team. To leverage this effectively, a solid understanding of Agile and DevOps methodologies is essential.

In a well-configured pipeline, every code commit automatically triggers a sequence of events: the software is built, and a suite of unit and integration tests is executed. This serves as an automated quality check.

How an Automated Quality Gate Delivers Value

The mechanism is straightforward and highly effective. If all automated tests pass, the build is considered stable and proceeds to the next stage, such as deployment to a staging environment. If any test fails, the pipeline halts immediately.

This immediate failure is a feature, not a bug. The developer responsible for the change receives instant notification that their commit introduced a regression. The flawed build is rejected, preventing the defect from propagating to other environments or team members.

A CI/CD pipeline with integrated test automation acts as a first line of defense, ensuring that every change is validated against a known quality standard before it can cause downstream issues.

The business impact is significant. This process dramatically reduces the risk of production failures, which in turn protects revenue, customer satisfaction, and brand reputation. It also accelerates time-to-market by ensuring only validated code progresses through the pipeline, eliminating wasteful manual checks and rework. This efficiency gain is a key driver of market growth; for instance, the Hungary Industrial Automation Market is forecast to grow at a CAGR of 10.2% from 2024 to 2030.

The Power of Shifting Left

Integrating automation into the CI/CD pipeline is the practical application of the "shift-left" philosophy. By catching defects moments after they are introduced, you address them at the point where they are cheapest and easiest to fix. The developer's context is still fresh, enabling a rapid resolution.

This contrasts sharply with traditional models where testing might occur weeks later, long after the developer has moved on to other tasks. The cost of fixing a bug at that late stage is far higher due to context switching, investigation, and potential architectural rework.

A typical automated pipeline workflow includes these steps:

- Code Commit: A developer pushes code to the central repository.

- Pipeline Trigger: The CI server (e.g., GitHub Actions, Jenkins) detects the change and initiates a build.

- Build and Unit Test: The application is compiled, and fast-running unit tests verify individual components.

- Integration Test: If unit tests pass, integration tests run to validate interactions between services and data stores.

- Quality Gate Decision: The pipeline proceeds or fails based on test outcomes. A failure triggers an immediate notification.

- Deployment (on pass): A successful build is automatically deployed to the next environment in the chain.

This workflow ensures your software is always in a deployable state, enabling you to release features with speed and confidence. In regulated sectors like fintech, this automated validation is also critical for demonstrating compliance and security, which can be complemented by practices like penetration testing as a service.
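
The pipeline steps listed above can be sketched as a small fail-fast runner. This is an illustrative model only, not the configuration format of any particular CI server. The `Stage` class, the `run_pipeline` function, and the stage names are assumptions made for the example, and each lambda stands in for a real build or test command.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]  # returns True when the stage succeeds


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure (the quality gate)."""
    log: List[str] = []
    for stage in stages:
        if stage.run():
            log.append(f"{stage.name}: passed")
        else:
            log.append(f"{stage.name}: failed, pipeline halted")
            break  # later stages, including deployment, never run
    return log


# Hypothetical stages; the integration stage simulates a regression.
stages = [
    Stage("build", lambda: True),
    Stage("unit tests", lambda: True),
    Stage("integration tests", lambda: False),
    Stage("deploy to staging", lambda: True),
]

for line in run_pipeline(stages):
    print(line)
```

In this run the deployment stage is never reached, which is the behavior the quality gate is meant to guarantee: a failing test stops the build before it can propagate.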

Measuring the ROI of Your Automation Efforts

How do you justify the investment in software test automation to business stakeholders? It's not enough to report that tests are running faster. To demonstrate value, you must connect technical improvements to business outcomes like cost reduction, risk mitigation, and revenue enablement.

Calculating the return on investment (ROI) is essential for framing automation as a strategic asset rather than a cost center. The right metrics tell a story that resonates with the entire leadership team, from the CTO to the CFO.

Key Metrics That Demonstrate Business Value

To build a compelling business case, focus on metrics that reflect business impact, not just technical activity. Avoid vanity metrics like "number of automated tests," which are meaningless without context.

Here are three KPIs that demonstrate the tangible impact of a well-executed automation strategy:

- Defect Escape Rate: This measures the percentage of defects that are discovered by users in production rather than by internal testing. A declining defect escape rate is direct evidence that your automation is effectively catching critical issues before they can impact customers, damage your brand, or cause revenue loss.

- Mean Time to Recovery (MTTR): When a production issue does occur, how quickly can you deploy a fix? A low MTTR indicates a resilient and reliable delivery pipeline, where automated testing gives you the confidence to release fixes rapidly. This metric speaks directly to operational stability and customer satisfaction.

- Test Suite Execution Time: This is the time required to get feedback on a new build. Reducing this cycle time from hours to minutes directly accelerates your time-to-market. Faster feedback enables faster iteration, allowing the business to ship features and respond to market changes more quickly than competitors.
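
The first two KPIs above are simple to compute once the underlying data is tracked. The sketch below assumes you can count defects by where they were found and that each incident has a detection and a resolution timestamp. The function names and sample figures are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def defect_escape_rate(found_in_production: int, found_internally: int) -> float:
    """Percentage of all known defects that were first found in production."""
    total = found_in_production + found_internally
    if total == 0:
        return 0.0
    return 100.0 * found_in_production / total


def mean_time_to_recovery(incidents: List[Tuple[datetime, datetime]]) -> timedelta:
    """Average of (resolved - detected) across incidents."""
    if not incidents:
        raise ValueError("at least one incident is required")
    durations = [resolved - detected for detected, resolved in incidents]
    return sum(durations, timedelta()) / len(durations)


# Illustrative figures only.
rate = defect_escape_rate(found_in_production=5, found_internally=45)
mttr = mean_time_to_recovery([
    (datetime(2024, 1, 8, 10, 0), datetime(2024, 1, 8, 10, 30)),
    (datetime(2024, 2, 3, 12, 0), datetime(2024, 2, 3, 13, 30)),
])
print(f"Defect escape rate: {rate:.1f}%")
print(f"MTTR: {mttr}")
```

Tracking these values per release, rather than as a single snapshot, is what shows whether the trend is moving in the right direction.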

A Simple Framework for Calculating ROI

While a detailed financial model can be complex, you can construct a strong business case by comparing the costs of automation against its financial benefits. This is a core discipline of effective software project management.

Calculating ROI translates technical achievements into the language of business: reduced costs, mitigated risks, and accelerated revenue generation.

Use this straightforward framework:

- Calculate Total Investment: Sum all associated costs, including software licenses, initial setup and training hours, and the ongoing engineering time for maintaining the test suite.

- Reduced Manual Effort: Calculate the hours your team would have spent on manual regression testing and multiply by the loaded cost of a QA engineer. This represents a direct cost saving.

- Cost of Escaped Defects: Quantify the cost of production bugs, including support time, emergency engineering effort, and the estimated impact on customer churn. Multiply this by the reduction in your defect escape rate.

- Faster Time-to-Market: Estimate the additional revenue generated by releasing a key feature weeks or months earlier than would have been possible with manual testing.
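
As a worked illustration of the framework above, the sketch below combines the investment and the three gain categories into a single figure. It assumes the conventional definition ROI = (gains - investment) / investment, which the framework does not spell out. All parameter names and numbers are hypothetical placeholders for your own data.

```python
def automation_roi(
    investment: float,
    manual_hours_saved: float,
    loaded_hourly_cost: float,
    escaped_defect_cost: float,
    escape_rate_reduction: float,
    early_revenue: float,
) -> float:
    """Return ROI as a fraction, e.g. 0.5 means a 50% return."""
    if investment <= 0:
        raise ValueError("investment must be positive")
    manual_savings = manual_hours_saved * loaded_hourly_cost
    # escape_rate_reduction is a fraction: 0.5 means half as many escaped defects.
    avoided_defect_cost = escaped_defect_cost * escape_rate_reduction
    gains = manual_savings + avoided_defect_cost + early_revenue
    return (gains - investment) / investment


# Illustrative annual figures only.
roi = automation_roi(
    investment=100_000,           # licenses, setup, training, maintenance
    manual_hours_saved=1_500,     # regression hours no longer done by hand
    loaded_hourly_cost=60,        # loaded cost of a QA engineer per hour
    escaped_defect_cost=200_000,  # yearly cost of production bugs before automation
    escape_rate_reduction=0.5,
    early_revenue=30_000,         # revenue attributed to shipping earlier
)
print(f"ROI: {roi:.0%}")
```

The early-revenue term is usually the hardest to defend, so it can help to present the result both with and without it.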

Presenting these figures transforms the discussion from a technical one to a strategic one, clearly demonstrating that software test automation is not an expense but an engine for business growth.

Common Pitfalls and How to Avoid Them

Many software test automation initiatives begin with high expectations but ultimately fail to deliver strategic value, becoming another source of technical debt. This outcome is often the result of common, avoidable mistakes.

By understanding these pitfalls, you can ensure your investment yields a reliable, low-maintenance safety net that enables faster, more confident releases.

Pitfall 1: Chasing 100% Automation Coverage

Attempting to achieve 100% automation coverage is a common mistake driven by a misleading metric. This goal often leads to a bloated, brittle test suite that is expensive to maintain, as it forces the automation of low-value or unstable test cases.

Solution: Adopt a risk-based approach. Prioritize automation efforts where they will have the most significant business impact:

- Critical Business Paths: Core user journeys that directly generate revenue, such as checkout flows or user registration.

- High-Risk Functionality: Features where failure would have severe consequences, such as payment integrations or data security components.

- Repetitive Regression Tests: Stable, predictable tests that are ideal candidates for automation, freeing up manual testers for more valuable work.

This focus ensures that automation delivers tangible value by protecting the most critical aspects of your business.

Pitfall 2: Treating Test Code as a Second-Class Citizen

Another frequent error is writing test code without adhering to software engineering best practices. This leads to a brittle test suite that breaks frequently with minor application changes, creating a constant maintenance burden.

Your test automation code is a software product in its own right. It requires the same level of discipline, architecture, and quality control as your production code.

Solution: Apply proper software engineering principles. Use stable element locators (e.g., data-testid attributes), implement design patterns like the Page Object Model (POM) to separate test logic from UI interaction, and enforce code reviews for all test scripts.

This upfront investment in code quality results in a resilient and scalable test suite that reduces long-term maintenance costs and allows your team to focus on delivering new features.

Frequently Asked Questions (FAQ)

Here are answers to common questions from technical leaders and product managers considering an investment in software test automation.

Will automation replace our manual testers?

No. This is a common misconception. The objective of software test automation is not to replace human testers but to augment their capabilities. Automation handles the repetitive, predictable regression checks, freeing up skilled QA professionals to focus on high-impact activities that machines cannot perform.

These activities include:

- Exploratory testing: Intelligently probing the application to find obscure, edge-case bugs.

- Usability testing: Evaluating the user experience from a human perspective.

- Complex scenario analysis: Assessing intricate workflows that mimic real-world user behavior.

Automation elevates the role of QA from manual gatekeeping to strategic quality assurance.

What is a good target for test automation coverage?

There is no universal "magic number." Chasing 100% test automation coverage is a counterproductive goal that leads to diminishing returns. The optimal level of coverage depends on your product's specific risk profile.

A pragmatic, risk-based approach is more effective. Focus automation on the areas that pose the greatest risk to the business: critical user journeys, core functionality, and high-stakes integrations. A common and healthy target is to automate 70-80% of the regression suite. This provides a strong safety net while leaving capacity for essential manual and exploratory testing to catch issues that automation would miss.

How should we handle flaky or unreliable tests?

A "flaky" test, one that passes and fails intermittently without any code changes, is a critical issue that erodes trust in your automation suite. It should be treated as a high-priority bug.

The first step is to quarantine the flaky test by removing it from your main CI/CD pipeline to prevent it from blocking valid builds. Once isolated, investigate the root cause, which is typically one of three issues:

- Timing Issues: The test script is not correctly waiting for the application to be ready.

- Test Data Dependencies: The test relies on specific data that is not consistently available.

- Environment Instability: The test environment itself is unreliable.

To prevent flakiness, build resilient tests from the start by using explicit waits instead of fixed delays and employing stable selectors for UI elements.
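
The difference between an explicit wait and a fixed delay can be shown with a small, framework-neutral sketch. The `wait_until` helper, the `FakeDriver` stand-in, and the `order-confirmation` test ID are all hypothetical. Real browser automation tools ship their own waiting mechanisms (Selenium's `WebDriverWait`, for example), which you would normally use instead of writing your own. The `CheckoutPage` class also follows the Page Object Model idea of keeping selectors in one place.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], Optional[T]],
               timeout: float = 5.0, interval: float = 0.05) -> T:
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


class FakeDriver:
    """Hypothetical stand-in for a browser driver: the element appears after a few lookups."""

    def __init__(self, ready_after: int = 3) -> None:
        self.lookups = 0
        self.ready_after = ready_after

    def find(self, test_id: str) -> Optional[str]:
        self.lookups += 1
        if self.lookups >= self.ready_after:
            return f"<element data-testid='{test_id}'>"
        return None


class CheckoutPage:
    """Page object: tests call intent-level methods; selectors live here only."""

    CONFIRMATION = "order-confirmation"  # hypothetical data-testid value

    def __init__(self, driver: FakeDriver) -> None:
        self.driver = driver

    def wait_for_confirmation(self, timeout: float = 2.0) -> str:
        # Returns as soon as the element exists instead of sleeping a fixed time.
        return wait_until(lambda: self.driver.find(self.CONFIRMATION), timeout=timeout)


driver = FakeDriver(ready_after=3)
element = CheckoutPage(driver).wait_for_confirmation()
print(element, "found after", driver.lookups, "lookups")
```

A fixed `time.sleep(2)` would either waste time when the page is fast or fail when it is slow. Polling with a timeout adapts to both cases, which is why it tends to reduce timing-related flakiness.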

Ready to build a software test automation strategy that accelerates delivery and reduces risk? The expert engineers at SCALER Software Solutions Ltd can augment your team with the specialized skills needed to build a resilient, scalable, and value-driven automation framework.

Request a proposal today and discover how our deep expertise in fintech and cloud technologies can help you ship better software, faster.
