
A Production-Ready Guide to Databricks and Airflow Integration

Integrating Databricks and Airflow creates a robust, enterprise-grade data platform. Airflow acts as the central orchestrator for complex, multi-system workflows, while Databricks provides the high-performance engine for data processing and analytics. This combination delivers both operational control and computational power.

This guide provides a practical framework for connecting these tools, focusing on security, cost-efficiency, and operational maturity. We will cover the business case, secure connection patterns, DAG design, cluster optimization, and CI/CD practices that separate production-ready systems from proofs of concept.

Why Integrate Databricks and Airflow?

Connecting Databricks and Airflow is a strategic decision to accelerate data product delivery while reducing operational overhead. Many data teams struggle with siloed orchestration, where Databricks jobs run independently from other business workflows. This fragmentation often leads to delayed insights, rising maintenance costs, and significant compliance risks.

The primary business driver is to create a unified, observable, and resilient data ecosystem. Instead of managing multiple schedulers, teams can centralize their entire workflow—from data ingestion and transformation to machine learning model training and API notifications—under a single orchestration layer.

The Strategic Advantage Beyond Tooling

The value extends beyond simply triggering a Databricks job. To fully leverage this integration, it’s important to understand the role of orchestration in cloud computing. Airflow’s core strength is managing dependencies across disparate systems, a common reality in modern enterprise environments.

This integration empowers teams to:

- Accelerate Time-to-Market: Automating end-to-end pipelines allows teams to deliver data products and insights faster.

- Lower Total Cost of Ownership: Centralizing orchestration logic eliminates the need for multiple schedulers and brittle custom scripts that are costly to maintain.

- Improve Governance and Compliance: A single point of control provides a clear, auditable trail for all data processing activities.

- Increase Reliability: Airflow’s built-in retry mechanisms and error handling make data pipelines more resilient to transient failures.

A common challenge arises when a Databricks-only workflow must wait for a file on an external SFTP server or trigger a process in a legacy on-premises system. Airflow bridges these gaps, so teams do not have to build custom, high-maintenance solutions that are prone to failure.

Measurable Business Outcomes

Adopting an integrated approach delivers tangible results. For example, regional case studies in Central and Eastern Europe show that organizations moving from disjointed, DIY setups to managed Databricks services orchestrated by Airflow can reduce median developer onboarding time from six weeks to two weeks. Additionally, operational incident rates often decrease by up to 40% within the first year, translating directly into productivity gains.

Ultimately, combining Databricks and Airflow allows engineering teams to focus on delivering business value instead of managing infrastructure. Strategic support, such as our team augmentation services, can help bridge skill gaps and accelerate these initiatives.

Establishing a Secure and Scalable Connection

Connecting Databricks to Airflow is the foundation of your orchestration strategy. A poorly configured connection can introduce security vulnerabilities and operational risks that disrupt data pipelines. For any enterprise data platform, establishing a secure connection from day one is non-negotiable.

Many tutorials suggest using a personal access token (PAT), but this approach is unsuitable for production. PATs are tied to individual users, creating a security risk if an employee leaves or a token is compromised. A resilient, auditable, and secure setup requires a more robust method.

Choosing Your Authentication Method

The appropriate authentication method depends on your organization's security policies, compliance requirements (e.g., SOC 2, GDPR), and operational maturity. Each method represents a trade-off between ease of setup, security posture, and management effort. The goal is to select a method that supports automation, adheres to the principle of least privilege, and simplifies credential rotation.

Authentication Methods for Airflow and Databricks

Deciding how Airflow authenticates with Databricks is a critical security decision. The table below outlines common options, ordered from least to most secure, to help you select the right approach for your environment.

Service principals are the baseline for any production deployment. While PATs are acceptable for local development, they should not be used in systems handling sensitive corporate data.

Your connection strategy must be auditable and automated from the start. Relying on manually created, long-lived tokens tied to individual user accounts creates security incidents and operational instability. Service principals provide the necessary foundation for a secure, production-grade system.

Implementing Service Principals with Airflow

To set up a service principal, first create one in your cloud provider's identity service (e.g., Microsoft Entra ID, formerly Azure Active Directory) or directly in Databricks. Next, grant it only the permissions it needs in your Databricks workspace, such as ‘Can Manage Run’ on the jobs Airflow triggers or ‘Can Restart’ on a specific cluster.

Finally, generate credentials (a client ID and secret) and store them securely in your Airflow secrets backend, such as Azure Key Vault or HashiCorp Vault. Never hardcode credentials in your DAGs or configuration files.
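As a minimal sketch, assuming Airflow 2.3+ (which accepts connections serialized as JSON) and a recent apache-airflow-providers-databricks release that supports Databricks OAuth machine-to-machine authentication through a service_principal_oauth connection extra, the service principal's client ID and secret map onto the connection's login and password fields. The helper name, workspace URL, and credential values are placeholders; check the extra field names against the provider version you run.

```python
import json


def build_databricks_connection(host: str, client_id: str, client_secret: str) -> str:
    """Serialize a Databricks connection for a service principal as Airflow JSON.

    Hypothetical helper: the resulting string is what you would store in your
    secrets backend (or an AIRFLOW_CONN_<CONN_ID> variable for local testing),
    never in a DAG file.
    """
    if not host.startswith("https://"):
        raise ValueError("host should be the full https:// workspace URL")
    connection = {
        "conn_type": "databricks",
        "host": host,
        "login": client_id,         # service principal application (client) ID
        "password": client_secret,  # OAuth secret, injected from the vault
        # Assumption: provider versions with OAuth M2M support read this flag.
        "extra": {"service_principal_oauth": True},
    }
    return json.dumps(connection)


if __name__ == "__main__":
    print(build_databricks_connection(
        "https://adb-1234567890123456.7.azuredatabricks.net",  # placeholder workspace
        "00000000-0000-0000-0000-000000000000",                   # placeholder client ID
        "<client-secret-from-vault>",                             # placeholder secret
    ))
```

Because the secret only ever exists in the vault and in this serialized form, rotating it means updating one vault entry rather than editing DAGs.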

This approach reduces maintenance overhead by enabling automated credential rotation and centralized policy enforcement. A 2023 survey of over 120 data teams in the CEE region found that while 57% self-host Airflow, 64% cited maintenance as a primary challenge. Proper connection strategies help mitigate this burden.

The diagram below illustrates the choice between building a custom platform and adopting a more integrated solution.

While a DIY approach offers flexibility, it also increases operational workload, making secure and standardized integration patterns essential. To identify potential vulnerabilities in your setup early, consider a security assessment like penetration testing as a service.

Designing Practical DAGs for Databricks Jobs

Once the connection is secure, the next step is building workflows that solve business problems. The goal is not just to trigger scripts but to design Directed Acyclic Graphs (DAGs) that are modular, reusable, and easy to maintain. Well-designed DAGs lead to lower development costs, faster incident resolution, and more reliable data pipelines.

When orchestrating Databricks with Airflow, you will primarily use operators from the official Databricks provider. The DatabricksRunNowOperator is essential, as it allows you to trigger an existing Databricks Job. This is the recommended production pattern because it separates orchestration logic in Airflow from execution logic in Databricks. This separation keeps your Airflow environment focused on scheduling and dependency management, while the heavy data processing remains within the optimized Databricks environment.

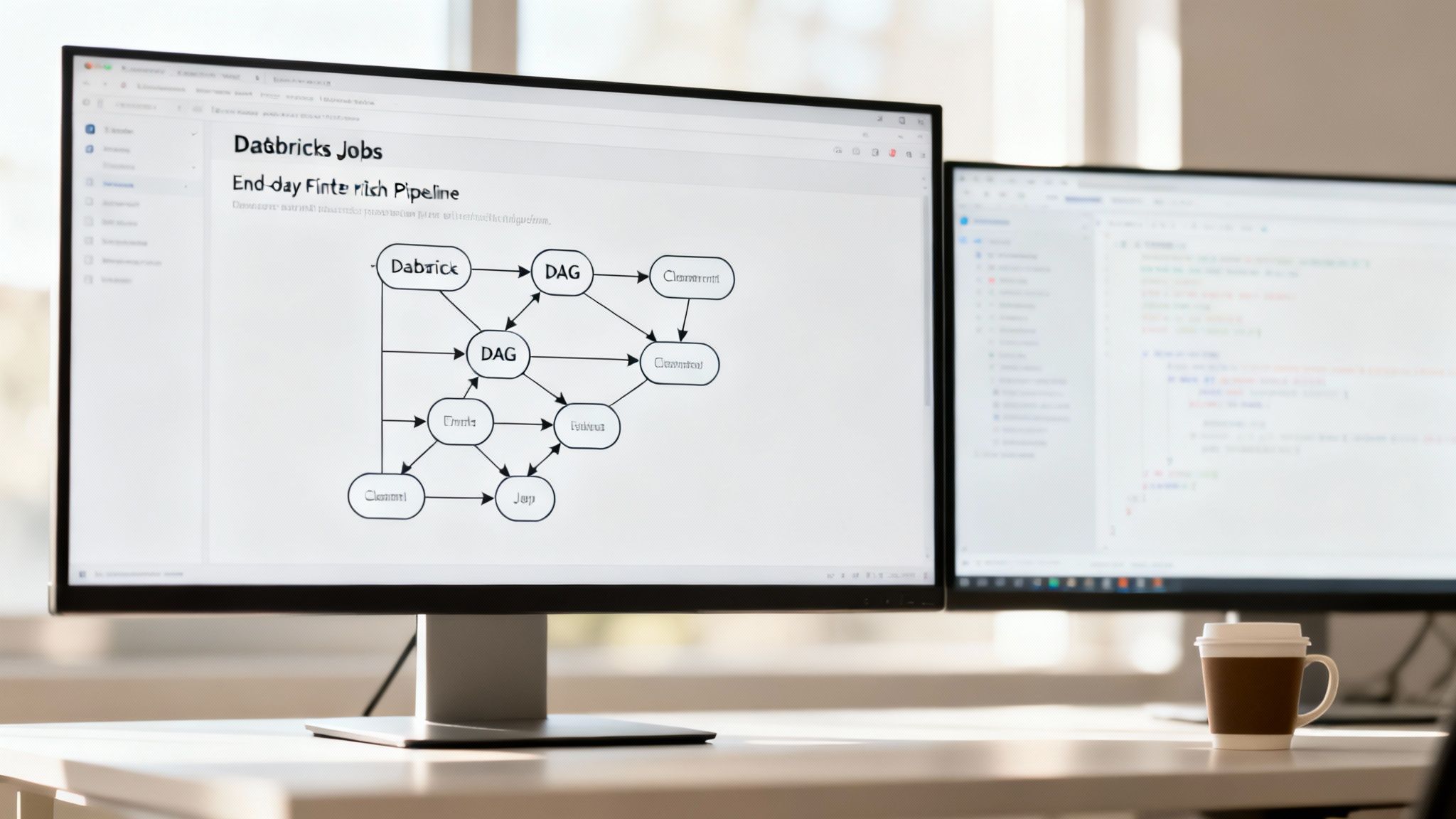
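The separation shows up in what Airflow actually sends. As a sketch, assuming the Databricks Jobs API 2.1 run-now endpoint that DatabricksRunNowOperator calls, the orchestration side supplies only a job ID and a few string parameters; the notebook code and cluster definition stay in the Databricks Job. The helper, job ID, and run_date parameter name are hypothetical.

```python
import json
from datetime import date
from typing import Optional


def build_run_now_payload(job_id: int, run_date: date,
                          extra_params: Optional[dict] = None) -> dict:
    """Build a Jobs API 2.1 run-now request body (hypothetical helper)."""
    # notebook_params is a string-to-string map, so values are stringified.
    params = {"run_date": run_date.isoformat()}
    params.update({key: str(value) for key, value in (extra_params or {}).items()})
    return {"job_id": job_id, "notebook_params": params}


payload = build_run_now_payload(123456, date(2024, 3, 29), {"region": "EMEA"})
print(json.dumps(payload))
```

In a DAG, the same values would typically be passed as DatabricksRunNowOperator(job_id=..., notebook_params=...), for example with Airflow's {{ ds }} template supplying the run date.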

A Practical Fintech Scenario: End-of-Day Risk Calculation

Consider a real-world fintech use case: an end-of-day risk calculation pipeline. This workflow must ingest trade data, aggregate market positions, run complex risk models, and load the results into a reporting database. This process consists of multiple dependent steps, making it an ideal candidate for Airflow orchestration.

A DAG for this pipeline could be structured as follows:

- Data Ingestion: A sensor task waits for a trade data file to arrive in cloud storage.

- Pre-processing in Databricks: Once the file is available, an Airflow task triggers a Databricks Job to ingest and clean the raw data.

- Risk Model Execution: A subsequent task triggers a separate Databricks Job to run the core risk calculation models, typically a compute-intensive notebook or Spark job.

- Result Loading: After the models complete, a final task triggers a Databricks Job to write the risk summary to a database for BI tools.

- Notifications: In parallel with the final step, a task can send a success or failure notification to a Slack channel.

This structure establishes a clear separation of concerns: Airflow manages the "what" and "when," while Databricks handles the "how."
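To make the dependency structure concrete without requiring an Airflow installation, the sketch below models the five steps as a plain Python graph and uses the standard library's graphlib (Python 3.9+) to derive which tasks can run together. The task IDs are hypothetical; in a real DAG the same edges would be declared with Airflow's >> operator, for example wait_for_trades >> preprocess_trades >> run_risk_models >> [load_results, notify_slack].

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (hypothetical task IDs).
RISK_PIPELINE = {
    "wait_for_trades": set(),                  # sensor on cloud storage
    "preprocess_trades": {"wait_for_trades"},  # Databricks Job: ingest and clean
    "run_risk_models": {"preprocess_trades"},  # Databricks Job: risk calculation
    "load_results": {"run_risk_models"},       # Databricks Job: write summary for BI
    "notify_slack": {"run_risk_models"},       # runs in parallel with load_results
}


def execution_waves(graph: dict) -> list:
    """Group tasks into waves; tasks in the same wave can run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


for wave in execution_waves(RISK_PIPELINE):
    print(wave)
```

One design note: by default an Airflow task runs only when all upstream tasks succeed, so a notification that must also fire on failure usually needs a trigger rule such as all_done or an on_failure_callback.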

Structuring DAGs for Modularity and Reuse

Avoid monolithic DAGs that consolidate all logic into a single file, as they become difficult to maintain. Instead, design for modularity from the start using features like Airflow’s TaskFlow API and Task Groups.

- Task Groups: Visually group related tasks in the Airflow UI. For the risk pipeline, you could create a data_ingestion Task Group and a risk_modelling Task Group to make the DAG easier to read and debug.

- Dynamic Task Generation: Instead of hardcoding tasks, generate them dynamically from a configuration file. For instance, if you need to process data for multiple regions (e.g., EMEA, APAC, AMER), you can create parallel tasks from a list, making your DAG adaptable to new requirements without code changes.

Treat your DAGs as configuration, not complex scripts. The heavy computational logic should be defined and tested within Databricks Jobs. Your Airflow DAG should serve as the glue that connects these jobs in the correct order with the necessary parameters.
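A minimal sketch of config-driven task generation, assuming a hypothetical JSON config that lists regions: the function turns the config into one parameter set per region. With Airflow 2.3+ dynamic task mapping, a list like this could feed something like DatabricksRunNowOperator.partial(task_id="process_region", job_id=...).expand(notebook_params=...), so adding a region means editing the config rather than the DAG.

```python
import json

# Hypothetical config file contents; in practice this might live next to the DAG.
REGIONS_CONFIG = '{"regions": ["EMEA", "APAC", "AMER"]}'


def region_params(config_text: str) -> list:
    """Return one notebook_params dict per configured region."""
    config = json.loads(config_text)
    regions = config.get("regions", [])
    if len(set(regions)) != len(regions):
        raise ValueError("duplicate region in config")
    return [{"region": region} for region in regions]


print(region_params(REGIONS_CONFIG))
```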

Passing Parameters with XComs

Workflows are dynamic, and tasks often need to exchange information. For example, an ingestion task may need to pass a file path to a processing task. Airflow’s Cross-Communication (XComs) feature is designed for this purpose.

With do_xcom_push enabled (the default), the DatabricksRunNowOperator pushes the Databricks run ID and run page URL to XCom, which downstream tasks can use to check the job's status or retrieve logs.

However, use XComs judiciously. XComs are not designed for passing large datasets. They are intended for small pieces of metadata, such as file paths, record counts, or unique IDs. Attempting to pass a large DataFrame via XCom will overload the Airflow metadata database and can bring your scheduler to a halt. Use a dedicated storage layer like S3 or ADLS for passing large volumes of data between tasks.
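One way to enforce the "metadata only" rule is a small guard that serializes a value before a task returns it and rejects anything above a size budget. The 48 KB budget and the helper name are assumptions for illustration, not Airflow limits; the underlying pattern is to write large data to S3 or ADLS and pass only its path.

```python
import json

MAX_XCOM_BYTES = 48 * 1024  # hypothetical team budget, not an Airflow setting


def xcom_safe(value):
    """Return value unchanged if it is small JSON-serializable metadata, else raise."""
    # json.dumps raises TypeError for objects such as DataFrames.
    encoded = json.dumps(value).encode("utf-8")
    if len(encoded) > MAX_XCOM_BYTES:
        raise ValueError(
            f"XCom payload is {len(encoded)} bytes; write the data to object storage "
            "and pass its path instead"
        )
    return value


# Small metadata passes through untouched.
print(xcom_safe({"path": "s3://example-bucket/trades/2024-03-29/", "row_count": 125000}))
```

In a TaskFlow task, you would return xcom_safe({...}) instead of the raw value, so an oversized payload fails the task early rather than landing in the metadata database.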

By applying these design principles, you can build Databricks and Airflow pipelines that are not only powerful but also robust, maintainable, and cost-effective over the long term.


Optimizing Clusters for Performance and Cost

Connecting Databricks and Airflow is the first step. The next is ensuring that the clusters your pipelines launch are cost-effective. Poorly configured clusters are a common source of unnecessary cloud expenditure, and every cluster configuration decision is a trade-off between performance and cost. The objective is to find the balance where pipelines execute efficiently without overprovisioning resources, which requires job-specific cluster configurations rather than one shared setup.

Right-Sizing Your Job Clusters

The most effective way to control costs is to use job clusters instead of all-purpose clusters. A job cluster is ephemeral: it is created for a single job run and terminates upon completion. This model ensures you only pay for the compute resources you use.

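When you define a job cluster, either in the Databricks Job that DatabricksRunNowOperator triggers or in the new_cluster specification passed to DatabricksSubmitRunOperator, you can specify the exact node type and worker count, tailoring the infrastructure to the workload.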

- Memory-Optimized Instances: Ideal for Spark jobs with large shuffles, joins, or aggregations that would otherwise spill to disk and degrade performance.

- Compute-Optimized Instances: Best for CPU-intensive tasks like complex mathematical calculations or certain machine learning algorithms.

- General-Purpose Instances: A balanced option for workloads without a clear resource bottleneck.
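The sketch below maps these workload profiles to new_cluster specifications in the shape the Databricks Jobs API uses, whether inside the Job definition that DatabricksRunNowOperator triggers or in the new_cluster argument of DatabricksSubmitRunOperator. The Azure VM sizes and the runtime version are illustrative assumptions; substitute node types available in your cloud and region.

```python
# Illustrative Azure node types per workload profile (assumptions; check availability).
NODE_TYPES = {
    "memory": "Standard_E8ds_v4",   # memory-optimized: large shuffles, joins, aggregations
    "compute": "Standard_F8s_v2",   # compute-optimized: CPU-heavy calculations
    "general": "Standard_D8ds_v5",  # general-purpose: no clear bottleneck
}


def job_cluster_spec(profile: str, num_workers: int,
                     spark_version: str = "13.3.x-scala2.12") -> dict:
    """Build a fixed-size new_cluster spec for an ephemeral job cluster."""
    if profile not in NODE_TYPES:
        raise ValueError(f"unknown profile {profile!r}; expected one of {sorted(NODE_TYPES)}")
    if num_workers < 0:
        raise ValueError("num_workers must be >= 0")
    return {
        "spark_version": spark_version,
        "node_type_id": NODE_TYPES[profile],
        "num_workers": num_workers,
    }


print(job_cluster_spec("memory", 4))
```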

By analyzing the Spark UI, you can identify resource bottlenecks and adjust cluster specifications accordingly. This practice can yield cloud savings of 30% or more by eliminating over-provisioned capacity.

Leveraging Autoscaling and Pools

Static cluster sizes are inefficient for workloads with variable resource demands. Databricks autoscaling is a powerful tool for cost management in these scenarios. By enabling autoscaling and setting minimum and maximum worker counts, you allow Databricks to dynamically adjust the number of nodes based on the current load. This provides the necessary resources during peak processing while avoiding costs for idle workers during lulls.

A key feature for improving performance is Databricks Pools. Pools maintain a set of idle, ready-to-use instances, reducing cluster start-up times from minutes to seconds. For frequent, short-lived jobs triggered by Airflow, this significantly reduces pipeline latency.

Instances in a pool still incur cloud provider costs while idle. The strategy is to use pools for your most common instance types, balancing faster start times against budget constraints.
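Autoscaling and pools are also expressed in the cluster specification. As a sketch in the Jobs API new_cluster shape (the pool ID and runtime version are placeholders): an autoscale block with minimum and maximum workers replaces num_workers, and when instance_pool_id is set the node type comes from the pool, so node_type_id is left out. Requiring at least one worker is a choice made for this sketch.

```python
def pooled_autoscaling_spec(pool_id: str, min_workers: int, max_workers: int,
                            spark_version: str = "13.3.x-scala2.12") -> dict:
    """Build a new_cluster spec that autoscales and draws nodes from an instance pool."""
    # This sketch requires at least one worker and a sensible range.
    if min_workers < 1 or max_workers < min_workers:
        raise ValueError("require 1 <= min_workers <= max_workers")
    return {
        "spark_version": spark_version,
        "instance_pool_id": pool_id,  # node type is inherited from the pool
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
    }


print(pooled_autoscaling_spec("pool-placeholder-id", 2, 8))
```

Keeping min_workers low limits the cost of lulls, while max_workers caps spend during peaks; the pool mainly buys faster start-up for frequent Airflow-triggered runs.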

Databricks Cluster Configuration Impact

The parameters you pass from Airflow directly affect operational cost and performance. The table below outlines how key configurations influence your bottom line and pipeline speed.

Cluster optimization is an ongoing process of monitoring, analysis, and refinement. By incorporating these practices into your Databricks and Airflow development lifecycle, you can build a platform that is both powerful and economically sustainable.


Building Enterprise-Grade CI/CD and Monitoring

A data pipeline’s value depends on its reliability. Once your Databricks and Airflow integration is operational, the focus must shift to operational excellence. This involves establishing a robust framework for monitoring, alerting, and deployment to minimize risk, improve developer productivity, and build trust in your data platform. Without this operational backbone, you risk silent failures, performance degradation, and data quality issues that can lead to poor business decisions and erode stakeholder confidence.

Proactive Monitoring and Alerting

Waiting for users to report stale dashboards is not a viable monitoring strategy. An enterprise-grade platform must proactively detect and report issues as they occur. Instrument both Airflow and Databricks to expose key operational metrics, then collect this data in tools like Prometheus and Grafana to create a unified dashboard for your entire data stack.

Key metrics to monitor include:

- Airflow Scheduler Health: Track DAG parsing times and scheduler heartbeats. Delays can indicate performance issues that will impact job execution.

- Task Durations and Failures: Monitor the execution time of individual Airflow tasks and Databricks jobs. Sudden spikes can signal data volume changes or performance regressions.

- Databricks Cluster Metrics: Observe cluster acquisition times and resource utilization. Slow start-up times can delay time-sensitive pipelines.

While dashboards are useful, they are passive. Active alerting is also necessary. Airflow's callback functions (on_failure_callback, on_success_callback) are ideal for this. You can write simple Python functions to send detailed alerts to services like Slack or PagerDuty, including direct links to failed task logs. This enables on-call teams to resolve incidents more quickly.
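A minimal sketch of such a callback, assuming a Slack incoming webhook whose URL you keep in your secrets backend (any URL shown is a placeholder). Airflow passes the task context dictionary to on_failure_callback; the sketch reads the task instance and exception from it and posts a short message with a link to the logs. The helper names are hypothetical, and the message formatting is split out so it can be unit-tested without network access.

```python
import json
import urllib.request


def build_failure_message(context: dict) -> dict:
    """Format a Slack message from an Airflow task context."""
    ti = context["task_instance"]
    return {
        "text": (
            f"{ti.dag_id}.{ti.task_id} failed: {context.get('exception')}\n"
            f"Logs: {ti.log_url}"
        )
    }


def make_slack_failure_callback(webhook_url: str):
    """Return a function suitable for on_failure_callback (hypothetical helper)."""
    def _callback(context: dict) -> None:
        body = json.dumps(build_failure_message(context)).encode("utf-8")
        request = urllib.request.Request(
            webhook_url,
            data=body,
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(request, timeout=10) as response:
            response.read()
    return _callback
```

Usage would look like default_args = {"on_failure_callback": make_slack_failure_callback(webhook_url)}, with webhook_url fetched from the secrets backend rather than written into the DAG.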

Implementing a Robust CI/CD Workflow

Manual deployments are slow, risky, and error-prone. Automating your deployment process with a Continuous Integration and Continuous Deployment (CI/CD) pipeline is essential for any modern data team. This enforces quality standards and ensures that only tested, reliable code reaches production.

A typical CI/CD workflow for a Databricks and Airflow project, using a tool like GitHub Actions, should automate several critical quality checks.

The purpose of CI/CD is not just automation; it is to build confidence in the deployment process. Every commit should automatically undergo a series of checks, confirming it is safe to deploy and reducing the time required to recover from any issues that arise.
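As one example of such a check, the sketch below is a standard-library-only lint step that could run in CI before anything touches Airflow: it parses each DAG file to catch syntax errors and flags strings that look like Databricks personal access tokens, which begin with dapi. The regular expression is a rough heuristic, not an official token format. Many teams pair a check like this with a DAG integrity test that loads the DAG folder with Airflow's DagBag and asserts that import_errors is empty.

```python
import ast
import re
from pathlib import Path

# Rough heuristic for hard-coded Databricks PATs (assumption, not an official format).
PAT_PATTERN = re.compile(r"\bdapi[0-9a-f]{32}\b")


def check_source(name: str, source: str) -> list:
    """Return human-readable problems found in one DAG file's source."""
    problems = []
    try:
        ast.parse(source, filename=name)
    except SyntaxError as exc:
        problems.append(f"{name}:{exc.lineno}: syntax error: {exc.msg}")
    for lineno, line in enumerate(source.splitlines(), start=1):
        if PAT_PATTERN.search(line):
            problems.append(f"{name}:{lineno}: possible hard-coded Databricks token")
    return problems


def main(dag_folder: str = "dags") -> int:
    """Check every .py file under dag_folder; a non-zero result should fail the CI job."""
    problems = []
    for path in sorted(Path(dag_folder).rglob("*.py")):
        problems.extend(check_source(str(path), path.read_text(encoding="utf-8")))
    for problem in problems:
        print(problem)
    return 1 if problems else 0
```

A CI step (for example, a GitHub Actions job) could call main() and exit with its return value, so a single flagged file blocks the merge.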

For more information on setting up this type of automation, see these CI/CD pipeline best practices.

A Phased Deployment Strategy

Your CI/CD pipeline should enforce a clear, multi-environment deployment strategy. A common and effective model uses distinct development, staging, and production environments.

- Development: Developers test DAGs and Databricks notebooks in isolated environments. The CI pipeline runs on every push to a feature branch to catch errors early.

- Staging: Merging a feature into the main branch automatically deploys it to a staging environment, which should be a near-perfect replica of production. This allows for end-to-end integration testing with realistic data volumes.

- Production: The final deployment to production should be gated by a manual approval step. This human-in-the-loop approach provides a final safety check before changes impact the business.

Automating these steps significantly reduces deployment risk. Each code change is validated through linting, unit tests, and integration tests before deployment. For more on building these checks, refer to our guide on QA and testing methodologies. This structured process transforms deployments from high-stress events into routine, predictable activities, freeing your team to focus on delivering business value.

Common Questions on Databricks and Airflow

As teams implement Databricks and Airflow, several common questions arise. Moving from a simple DAG to a production pipeline uncovers practical challenges. Here are answers to the most frequent questions.

When Should I Use Databricks Workflows Instead of Airflow?

The choice between Databricks Workflows and Airflow depends on the scope of your pipeline.

- Use Databricks Workflows when your entire process is contained within the Databricks ecosystem. If you are chaining notebooks and jobs without external dependencies, this is a cleaner, more integrated solution.

- Use Airflow when you need to orchestrate tasks outside of Databricks. If your pipeline needs to wait for a file in S3, trigger a job in another cloud service, or pull data from a SaaS API, Airflow is the appropriate tool for cross-system coordination.

Many mature data teams adopt a hybrid model. Airflow serves as the high-level orchestrator for the business process, and one of its tasks is to trigger a more complex Databricks Workflow. This approach combines Airflow’s broad orchestration capabilities with the focused task management of Databricks.

What Is the Most Secure Way to Manage Secrets?

Storing credentials in the Airflow metadata database is a significant security risk. The best practice is to use a dedicated secrets management tool like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault.

Configure the Airflow Secrets Backend to fetch credentials from your central vault at runtime. This centralizes secrets management, simplifies credential rotation, and provides a clear audit trail. For Databricks, the enterprise security standard is to use service principals with short-lived OAuth tokens, managed through the secrets backend. This eliminates the risks associated with long-lived, user-tied API tokens.
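For reference, the short-lived token exchange behind this setup is a standard OAuth client-credentials request. The sketch below assumes Databricks' workspace-level OAuth machine-to-machine endpoint (/oidc/v1/token with the all-apis scope) and only builds the request without sending it; the helper name, workspace URL, and credentials are placeholders. In practice, the Databricks provider or SDK performs this exchange for you when configured for service principal OAuth.

```python
import base64
import urllib.parse
import urllib.request


def build_token_request(workspace_url: str, client_id: str,
                        client_secret: str) -> urllib.request.Request:
    """Build (but do not send) an OAuth client-credentials token request."""
    credentials = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    body = urllib.parse.urlencode(
        {"grant_type": "client_credentials", "scope": "all-apis"}
    ).encode()
    return urllib.request.Request(
        f"{workspace_url.rstrip('/')}/oidc/v1/token",
        data=body,
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


req = build_token_request("https://example.cloud.databricks.com", "my-client-id", "my-secret")
print(req.get_method(), req.full_url)
```

Because the returned access token expires, nothing long-lived ever leaves the vault except the client secret itself, which the secrets backend can rotate centrally.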

How Can I Handle Transient Failures in Databricks Jobs?

A single retry is insufficient for a truly resilient pipeline. A layered approach is more effective.

- Airflow-Level Retries: Configure retries on your Databricks operator in the DAG. Using an exponential backoff strategy (retry_exponential_backoff=True) is recommended to avoid overwhelming a struggling system. This is effective for handling temporary network or infrastructure issues.

- Application-Level Retries: Implement idempotent logic and internal retry loops within your Databricks code (e.g., Python or Scala notebook) for specific operations, such as calling an external API that may be temporarily unavailable.

Combining these two levels provides maximum resilience, allowing pipelines to recover automatically from most common failures and reducing the need for manual intervention.
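The two layers can be sketched as follows. The first part is an Airflow default_args dictionary using BaseOperator's retry parameters (retries, retry_delay, retry_exponential_backoff, max_retry_delay); the values are examples. The second part is a hypothetical application-level helper you could use inside a Databricks notebook around a flaky external call: exponential backoff with full jitter, with sleep injectable for testing. It is only safe when the wrapped operation is idempotent.

```python
import random
import time
from datetime import timedelta

# Layer 1: Airflow task-level retries (example values), e.g. DAG(default_args=DEFAULT_ARGS).
DEFAULT_ARGS = {
    "retries": 3,
    "retry_delay": timedelta(minutes=2),
    "retry_exponential_backoff": True,
    "max_retry_delay": timedelta(minutes=30),
}


# Layer 2: application-level retries inside Databricks code (hypothetical helper).
def call_with_backoff(fn, attempts=5, base_delay=1.0, max_delay=30.0,
                      retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call fn(), retrying transient errors with exponential backoff and full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: fail the job so Airflow's retries take over
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
```

Re-raising after the last attempt is deliberate: the inner loop absorbs brief blips, while longer outages surface as a task failure that the outer Airflow layer retries on a slower schedule.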

What Are the Key Performance Metrics to Monitor?

Effective monitoring involves more than just watching for failures; it requires identifying performance bottlenecks before they impact business SLAs.

For a combined Databricks and Airflow stack, monitor the following:

- Airflow Metrics: Track DAG parsing times, task scheduling latency, and the number of queued tasks. An increase in these metrics may indicate that your scheduler is overloaded.

- Databricks Metrics: Monitor cluster acquisition time, job execution duration, and resource utilization (CPU/memory). These are critical for optimizing both performance and cost.

- Business-Critical Metric: The most important metric is end-to-end pipeline latency—the total time from DAG start to when clean data is available to stakeholders. Measuring this against your Service Level Agreements (SLAs) demonstrates the value and reliability of your data platform.
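End-to-end latency is simple arithmetic once you record two timestamps. A minimal sketch, assuming you capture when the DAG run started and when the final data became available (for example, the DAG run's start date and the completion time of the load task); the 90-minute SLA and the helper name are hypothetical.

```python
from datetime import datetime, timedelta, timezone

SLA = timedelta(minutes=90)  # hypothetical end-of-day SLA


def sla_report(dag_started: datetime, data_available: datetime,
               sla: timedelta = SLA) -> dict:
    """Compute end-to-end latency and whether it met the SLA."""
    latency = data_available - dag_started
    if latency < timedelta(0):
        raise ValueError("data_available precedes dag_started")
    return {
        "latency_minutes": round(latency.total_seconds() / 60, 1),
        "sla_met": latency <= sla,
        "headroom_minutes": round((sla - latency).total_seconds() / 60, 1),
    }


start = datetime(2024, 3, 29, 18, 0, tzinfo=timezone.utc)
done = datetime(2024, 3, 29, 19, 12, tzinfo=timezone.utc)
print(sla_report(start, done))
# {'latency_minutes': 72.0, 'sla_met': True, 'headroom_minutes': 18.0}
```

Tracking headroom rather than a simple pass/fail shows a pipeline drifting toward its SLA before it actually breaches it.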

At SCALER Software Solutions Ltd, we specialize in building secure, scalable, and cost-effective data platforms. Our expert engineers can help you design and implement a production-ready Databricks and Airflow integration that accelerates your data initiatives.
